Understanding How AI Learns from Data and Improves Over Time Without Explicit Programming

Introduction

Artificial Intelligence (AI), particularly through Machine Learning (ML), has the remarkable ability to learn from data and improve its performance over time without being explicitly programmed for each specific task. This is a fundamental shift from traditional programming, where developers write detailed instructions for every possible scenario.

How Does AI Learn from Data?

1. Machine Learning Basics

- Definition: Machine Learning is a subset of AI that enables computers to learn from data and make decisions or predictions based on that data.

- Learning from Examples: Instead of being told exactly what to do, ML algorithms identify patterns within large datasets.

2. Training Models

- Data Input: In the most common setup (supervised learning, one of the three types of learning described later in this article), the model is given training data that pairs inputs with the desired outputs.

- Learning Process: The model adjusts its internal parameters to minimize the difference between its predictions and the actual results.

- Iteration: This process is repeated many times, allowing the model to improve its accuracy.
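The training steps above can be sketched in a few lines. This is a minimal illustration, not a production training loop: it assumes a one-variable linear model, mean squared error as the measure of "difference", and plain gradient descent as the adjustment rule. The data, the function name `train_linear_model`, and the hyperparameters are made up for the example.

```python
# Fit y = w * x + b by gradient descent on mean squared error.
# The data and hyperparameters below are illustrative assumptions.

def train_linear_model(xs, ys, learning_rate=0.01, epochs=5000):
    w, b = 0.0, 0.0  # internal parameters, starting from an arbitrary guess
    n = len(xs)
    for _ in range(epochs):  # Iteration: repeat the adjustment many times
        # Difference between prediction and actual result for each example
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Learning process: step against the gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Data input: inputs paired with desired outputs (generated from y = 2x + 1)
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]

w, b = train_linear_model(xs, ys)
print(round(w, 2), round(b, 2))
```

Nowhere in the code is the rule "multiply by 2 and add 1" written down. The loop recovers values of `w` and `b` close to 2 and 1 purely from the examples.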

3. Generalization

- Applying Knowledge: After training, the model can apply what it has learned to new, unseen data.

- Adaptability: It can handle variations and nuances without needing new code for each specific case.

Why Doesn't AI Need Explicit Programming for Each Task?

Traditional Programming vs. Machine Learning

- Traditional Programming: Requires explicit instructions for every possible input or condition. For complex tasks, this can become incredibly cumbersome or even impossible.

- Machine Learning: The model learns the underlying patterns and rules from the data itself, eliminating the need to program each scenario manually.

Example: Email Spam Filtering

- Traditional Approach: Write specific rules to filter spam based on known spam phrases or senders.

- Machine Learning Approach:

  - Training: Provide the model with examples of spam and non-spam emails.

  - Learning: The model identifies patterns that are common in spam emails.

  - Prediction: It can then evaluate new emails and predict whether they are spam based on learned patterns.
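As a rough sketch of the machine learning approach, the example below trains a tiny naive Bayes classifier on word counts. Naive Bayes is one common choice for text classification and is used here as an assumption; the article does not prescribe a specific algorithm. The four training emails, the label `"ham"` for non-spam, and the function names are invented for illustration.

```python
import math
from collections import Counter

def train_spam_filter(examples):
    """examples: list of (text, label) pairs, where label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocabulary

def predict(model, text):
    word_counts, label_counts, vocabulary = model
    total_examples = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Log prior: how common this label was in the training data
        score = math.log(label_counts[label] / total_examples)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Add-one (Laplace) smoothing avoids zero probabilities
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)

# Training: hypothetical labeled examples
training_emails = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train_spam_filter(training_emails)

# Prediction: classify emails the model has never seen
print(predict(model, "claim your free prize"))
print(predict(model, "agenda for the team meeting"))
```

No spam phrases are hard-coded. The word statistics come entirely from the labeled examples, so adding new examples changes the filter's behavior without changing the code.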

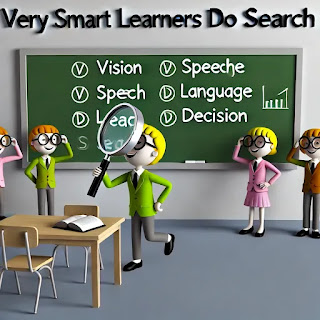

Types of Learning in AI

1. Supervised Learning

- Definition: The model is trained on labeled data, meaning each training example is paired with an output label.

- Purpose: To predict outcomes based on input data.

- Example: Predicting house prices based on features like size, location, and age.
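A small sketch of the house price example, assuming a k-nearest-neighbors approach: the predicted price is the average price of the most similar labeled houses. The features (size and age only), the prices, and the function name `predict_price` are all invented for illustration, and a real model would scale features and use far more data.

```python
def predict_price(training_data, query, k=2):
    """Average the prices of the k training houses most similar to the query."""
    def distance(a, b):
        # Euclidean distance between two feature tuples
        return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

    nearest = sorted(training_data, key=lambda row: distance(row[0], query))[:k]
    return sum(price for _, price in nearest) / k

# Labeled data: (size in square metres, age in years) paired with a price.
# All numbers are made up.
houses = [
    ((50, 30), 150_000),
    ((60, 25), 180_000),
    ((100, 10), 320_000),
    ((120, 5), 400_000),
]

# Predict the price of a house that is not in the training data
print(predict_price(houses, (110, 8)))  # → 360000.0
```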

2. Unsupervised Learning

- Definition: The model learns from unlabeled data, identifying inherent patterns or groupings.

- Purpose: To find structure within data.

- Example: Grouping customers into segments based on purchasing behavior.
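The customer segmentation example might look like the sketch below, which assumes k-means clustering on a single feature (monthly spend). The spend figures, the function name `k_means_1d`, and the choice of two segments are hypothetical. No labels are provided; the groups emerge from the data.

```python
def k_means_1d(values, k, iterations=20):
    ordered = sorted(values)
    # Deterministic start: spread the initial centroids across the sorted values
    centroids = [ordered[i * (len(ordered) - 1) // max(k - 1, 1)] for i in range(k)]
    for _ in range(iterations):
        # Assign each value to its nearest centroid
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster (unchanged if empty)
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical monthly spend per customer, with no labels attached
monthly_spend = [12, 15, 18, 20, 210, 230, 250, 190]

centroids, clusters = k_means_1d(monthly_spend, k=2)
print(centroids)  # → [16.25, 220.0]
print(clusters)   # → [[12, 15, 18, 20], [210, 230, 250, 190]]
```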

3. Reinforcement Learning

- Definition: The model learns by interacting with an environment and receiving feedback in the form of rewards or penalties.

- Purpose: To make a sequence of decisions that maximize cumulative rewards.

- Example: Training a robot to navigate a maze.
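To make the reward-and-penalty idea concrete, here is a minimal sketch using tabular Q-learning, one well-known reinforcement learning algorithm chosen here as an assumption. A one-dimensional corridor of five cells stands in for the maze: the agent starts at the left end and the goal is the right end. The reward values, hyperparameters, and function name are illustrative.

```python
import random

def train_corridor_agent(n_states=5, episodes=1000, alpha=0.5, gamma=0.9,
                         epsilon=0.2, max_steps=200, seed=0):
    rng = random.Random(seed)
    goal = n_states - 1
    moves = [-1, +1]  # action 0 = left, action 1 = right
    # q[state][action] estimates the long-term reward of taking that action
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _episode in range(episodes):
        state = 0
        for _step in range(max_steps):
            # Epsilon-greedy: usually take the best known action, sometimes explore
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max((0, 1), key=lambda a: q[state][a])
            # Walls at both ends keep the agent inside the corridor
            next_state = min(max(state + moves[action], 0), goal)
            # Reward for reaching the goal, small penalty for every other step
            reward = 1.0 if next_state == goal else -0.01
            # The goal is terminal, so it has no future value
            future = 0.0 if next_state == goal else max(q[next_state])
            # Q-learning update: nudge the estimate toward reward + discounted future
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
            if state == goal:
                break
    return q

q_table = train_corridor_agent()
# Read the learned policy for every non-goal cell
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The agent is never told that the goal is to the right. After enough episodes, the learned values should favor moving right in every cell because that is what maximizes cumulative reward.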

Improving Over Time

- Continuous Learning: Models can be retrained with new data to improve their accuracy.

- Feedback Mechanisms: User interactions can provide additional data to refine the model.

- Avoiding Overfitting: Techniques like cross-validation evaluate the model on data held out from training, which helps reveal when it has merely memorized the training data instead of learning patterns that generalize to new data.
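The sketch below shows one way k-fold cross-validation can expose memorization. It compares two hypothetical models on the same made-up data: a least-squares line, and a lookup table that stores every training example exactly. Each model is fitted on part of the data and scored on the held-out part. The fold scheme (every k-th example) and all names are assumptions made for the example.

```python
def k_fold_scores(xs, ys, k, fit, predict):
    """Return the mean squared error on each held-out fold."""
    n = len(xs)
    scores = []
    for fold in range(k):
        # Hold out every k-th example, offset by the fold number
        held_out = set(range(fold, n, k))
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        model = fit(train_x, train_y)
        errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in sorted(held_out)]
        scores.append(sum(errors) / len(errors))
    return scores

def fit_line(xs, ys):
    # Closed-form least-squares fit of y = slope * x + intercept
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x

def predict_line(model, x):
    slope, intercept = model
    return slope * x + intercept

def fit_lookup(xs, ys):
    # "Memorizer": stores each training pair, learns no pattern
    return dict(zip(xs, ys))

def predict_lookup(model, x):
    return model.get(x, 0.0)  # has no answer for unseen inputs

# Made-up data: roughly y = 2x + 1 with small noise
xs = list(range(10))
ys = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0, 12.9, 15.2, 16.8, 19.1]

line_scores = k_fold_scores(xs, ys, 5, fit_line, predict_line)
lookup_scores = k_fold_scores(xs, ys, 5, fit_lookup, predict_lookup)
print(max(line_scores), min(lookup_scores))
```

The lookup table is perfect on the data it was fitted on, yet its held-out error is far larger than the line's. That gap between training and held-out performance is the signal cross-validation is designed to surface.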

Real-World Example: Voice Recognition

Problem

Developing software that can accurately convert spoken language into text across different accents and dialects.

Traditional Programming Challenges

- Complexity: Manually coding rules for every pronunciation and accent is impractical.

- Limitations: Can't adapt to new words or slang without additional programming.

Machine Learning Solution

- Data Collection: Gather large datasets of spoken language with corresponding text transcripts.

- Training: Use this data to train a speech recognition model.

- Adaptation: The model learns to recognize patterns in speech sounds and associate them with words.

- Improvement: As more data is collected (including user corrections) and the model is retrained on it, its accuracy typically improves.

Benefits of Learning from Data

- Scalability: Can handle vast amounts of data and complex tasks.

- Adaptability: Adjusts to new information without the need for manual code changes.

- Efficiency: Reduces development time since not every scenario needs explicit programming.

Conclusion

AI's ability to learn from data and improve over time is what makes technologies like recommendation systems, autonomous vehicles, and language translators possible. By processing large amounts of data, AI models discern patterns and make informed decisions or predictions, all without the need for explicit instructions for every possible situation.

In Simple Terms:

Think of AI like a student learning to solve math problems. Instead of memorizing the answer to every possible problem, the student learns the underlying principles and methods. This way, when faced with a new problem, they can apply what they've learned to find the solution, even if they've never seen that exact problem before.
