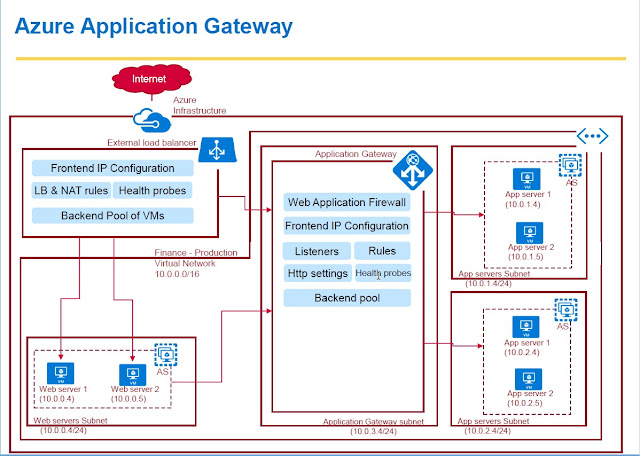

Different Components of Application Gateway

This section covers application gateway, its components, and its capabilities.

Application gateway offers layer 7 (application layer) load balancing for HTTP and HTTPS traffic.

When you compare application gateway with load balancer, the difference is the layer: load balancer offers layer 4 (transport layer) load balancing, whereas application gateway offers layer 7 load balancing.

However, load balancer can distribute any TCP or UDP traffic, whereas application gateway can distribute only HTTP and HTTPS traffic.

One other difference between an external load balancer and application gateway is that application gateway always resides within a virtual network, whereas with a load balancer you can choose whether it sits inside or outside a virtual network.
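To make the layer 4 vs layer 7 distinction concrete, here is a minimal, purely illustrative Python sketch. A layer 4 device sees only addresses, ports and protocol, so all it can do is hash that 5-tuple (Azure Load Balancer's default distribution is also hash-based on the 5-tuple). A layer 7 device parses the HTTP request and can decide based on its content. The IP addresses, the SHA-256 hash and the `/images/` rule are assumptions made for the example. This is not how either Azure service is implemented internally.

```python
import hashlib

# Hypothetical backend addresses, for illustration only.
WEB_POOL = ["10.0.1.4", "10.0.1.5"]
IMAGE_POOL = ["10.0.2.4"]


def layer4_pick(src_ip: str, src_port: int, dst_ip: str, dst_port: int, protocol: str) -> str:
    """Layer 4: only addresses, ports and protocol are visible, so hash the 5-tuple."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(WEB_POOL)
    return WEB_POOL[index]


def layer7_pick(raw_request: str) -> str:
    """Layer 7: the HTTP request is parsed, so the URL path can choose the pool."""
    request_line = raw_request.split("\r\n", 1)[0]
    _method, path, _version = request_line.split(" ", 2)
    pool = IMAGE_POOL if path.startswith("/images/") else WEB_POOL
    return pool[0]  # always the first server, to keep the example short


if __name__ == "__main__":
    # The same 5-tuple always maps to the same backend, whatever the payload is.
    print(layer4_pick("203.0.113.7", 50123, "20.1.2.3", 443, "TCP"))
    # A layer 7 device can send /images/ requests to a dedicated pool.
    print(layer7_pick("GET /images/logo.png HTTP/1.1\r\nHost: contoso.com\r\n\r\n"))  # 10.0.2.4
```

The layer 4 function never looks at the request body or URL, which is why a load balancer can carry any TCP or UDP protocol but cannot route on paths or host names.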

The components of application gateway are:

1. Frontend IP configuration: the IP addresses that incoming traffic is sent to.

2. Backend pool: the pool of IP addresses that the traffic is destined for.

3. Listeners: a listener listens for traffic arriving on a particular port, in this case either 80 for HTTP or 443 for HTTPS. Rules map these listeners to backend pools, so each rule sends the incoming traffic to a particular destination pool.

4. Health probe: monitors the health of the machines in the backend pool.

5. HTTP settings: define, for example, whether to use cookie-based session affinity and which port on the backend pool the traffic is routed to.

6. Web application firewall (WAF): protects your web application from common web attacks.
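One way to see how these pieces fit together is a small Python model. A rule ties a listener (frontend IP plus port) to a backend pool and its HTTP settings, and the health probe filters out unhealthy servers before one is chosen. The class names, the round-robin choice and the example addresses are assumptions made for illustration, not Azure's API. The WAF is left out of the model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class FrontendIPConfig:
    address: str  # IP address that clients send traffic to


@dataclass
class BackendPool:
    name: str
    servers: List[str]  # backend IP addresses


@dataclass
class HttpSettings:
    backend_port: int  # port on the backend servers that traffic is forwarded to
    cookie_affinity: bool = False  # not used by this sketch's routing logic


@dataclass
class Listener:
    frontend: FrontendIPConfig
    port: int  # e.g. 80 for HTTP, 443 for HTTPS


@dataclass
class Rule:
    listener: Listener  # where traffic arrives
    pool: BackendPool  # where it is sent
    settings: HttpSettings  # how it is sent


@dataclass
class Gateway:
    rules: List[Rule]
    health_probe: Callable[[str], bool]  # True if a backend server is healthy
    _counter: int = 0  # round-robin position (a simplifying assumption)

    def route(self, frontend_ip: str, port: int) -> Optional[str]:
        """Find the rule whose listener matches, then pick a healthy backend server."""
        for rule in self.rules:
            listener = rule.listener
            if listener.frontend.address == frontend_ip and listener.port == port:
                healthy = [s for s in rule.pool.servers if self.health_probe(s)]
                if not healthy:
                    return None  # no healthy backend: the request cannot be served
                server = healthy[self._counter % len(healthy)]
                self._counter += 1
                return f"{server}:{rule.settings.backend_port}"
        return None  # no listener on this IP/port


if __name__ == "__main__":
    frontend = FrontendIPConfig("20.1.2.3")
    pool = BackendPool("web", ["10.0.1.4", "10.0.1.5", "10.0.1.6"])
    rule = Rule(Listener(frontend, 80), pool, HttpSettings(backend_port=8080))
    down = {"10.0.1.5"}  # pretend the health probe marks this server unhealthy
    gateway = Gateway([rule], health_probe=lambda server: server not in down)
    print([gateway.route("20.1.2.3", 80) for _ in range(4)])
    # ['10.0.1.4:8080', '10.0.1.6:8080', '10.0.1.4:8080', '10.0.1.6:8080']
```

The unhealthy server `10.0.1.5` never receives traffic. Traffic on port 443 gets no answer here because no listener is configured for it.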

So these are the components of application gateway.

Let's go through some of the capabilities of application gateway.

Capabilities

- HTTP/HTTPS load balancing: load balances HTTP and HTTPS traffic.

- Web application firewall: protects your web application against common web attacks.

- Cookie-based session affinity: routes all traffic from a user session to the same backend server for the duration of that session.

- SSL offload: terminates SSL at the application gateway, so the backend servers do not have to decrypt the traffic themselves.

- URL-based content routing: routes traffic to different backend pools based on the URL path of the request.

- Multi-site routing: hosts multiple sites behind a single public IP address. You configure the application gateway so that it routes each request to a particular backend pool based on its domain name.

- Health monitoring: monitors the health of your backend virtual machines through a health probe configured on the application gateway.
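Multi-site routing, URL-based content routing and cookie-based session affinity can be combined in one routing decision. Here is a minimal Python sketch, assuming a hypothetical configuration. The request's host name picks the site, the longest matching path prefix picks the backend pool, and an affinity cookie pins the session to one server. The host names, pool names, cookie name and prefix-matching rule are all illustrative assumptions. Application Gateway uses its own path-pattern syntax and cookie format.

```python
import itertools
import secrets
from typing import Dict, List, Optional, Tuple

# Hypothetical configuration: host name -> (path prefix, backend pool name) pairs.
SITE_RULES: Dict[str, List[Tuple[str, str]]] = {
    "contoso.com": [("/images/", "images-pool"), ("/video/", "video-pool"), ("/", "default-pool")],
    "fabrikam.com": [("/", "fabrikam-pool")],
}
POOLS: Dict[str, List[str]] = {
    "images-pool": ["10.0.2.4", "10.0.2.5"],
    "video-pool": ["10.0.3.4"],
    "default-pool": ["10.0.1.4", "10.0.1.5"],
    "fabrikam-pool": ["10.0.4.4"],
}
AFFINITY_COOKIE = "GatewayAffinity"  # hypothetical cookie name

_round_robin = {name: itertools.cycle(servers) for name, servers in POOLS.items()}
_sessions: Dict[str, str] = {}  # affinity cookie value -> backend server


def select_pool(host: str, path: str) -> Optional[str]:
    """Multi-site routing on the Host header, then URL path routing (longest prefix wins)."""
    rules = SITE_RULES.get(host.split(":")[0].lower())
    if rules is None:
        return None  # no site configured for this domain
    matches = [(prefix, pool) for prefix, pool in rules if path.startswith(prefix)]
    if not matches:
        return None
    return max(matches, key=lambda match: len(match[0]))[1]


def route(host: str, path: str, cookies: Dict[str, str]) -> Tuple[Optional[str], Dict[str, str]]:
    """Return (backend server, cookies to set). Reuse the pinned server if the cookie is valid."""
    pool = select_pool(host, path)
    if pool is None:
        return None, {}
    token = cookies.get(AFFINITY_COOKIE)
    if token in _sessions and _sessions[token] in POOLS[pool]:
        return _sessions[token], {}  # session affinity: same server as before
    server = next(_round_robin[pool])  # new session: pick the next server in the pool
    token = secrets.token_hex(8)
    _sessions[token] = server
    return server, {AFFINITY_COOKIE: token}


if __name__ == "__main__":
    print(select_pool("contoso.com", "/video/intro.mp4"))  # video-pool
    print(select_pool("fabrikam.com", "/about"))  # fabrikam-pool
    first, set_cookie = route("contoso.com", "/images/logo.png", {})
    again, _ = route("contoso.com", "/images/banner.png", set_cookie)
    print(first == again)  # True: the cookie pins the session to one server
```

Both sites share one frontend address here. Only the host name separates them, which is what lets several sites sit behind a single public IP.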

So these are the different capabilities of application gateway.

Autoscaling public preview

In addition to the features described above, application gateway also offers a public preview of a new SKU, Standard_v2, which offers autoscaling and other critical performance enhancements:

- Autoscaling: Application Gateway or WAF deployments under the autoscaling SKU can scale up or down based on changing traffic load patterns. Autoscaling also removes the requirement to choose a deployment size or instance count during provisioning.

- Zone redundancy: an Application Gateway or WAF deployment can span multiple Availability Zones, removing the need to provision and spin up separate Application Gateway instances in each zone behind Traffic Manager.

- Static VIP: the application gateway VIP now supports the static VIP type exclusively. This ensures that the VIP associated with the application gateway does not change, even after a restart.

- Faster deployment and update times compared with the generally available SKU.

- 5x better SSL offload performance compared with the generally available SKU.
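The autoscaling idea, scaling with load instead of fixing an instance count up front, comes down to simple arithmetic. This Python sketch assumes a hypothetical per-instance capacity measured in requests per second, clamped between a minimum and maximum instance count. The real service makes this decision itself, using its own metrics and units, so treat the numbers only as an illustration of the idea.

```python
import math


def instances_needed(current_rps: float, rps_per_instance: float, min_count: int, max_count: int) -> int:
    """Cover the current load, but stay within the configured minimum and maximum."""
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    wanted = math.ceil(current_rps / rps_per_instance)
    return max(min_count, min(max_count, wanted))


if __name__ == "__main__":
    # Hypothetical traffic levels (requests per second) and capacity figures.
    for load in [100, 4500, 50000]:
        print(load, instances_needed(load, rps_per_instance=1000, min_count=2, max_count=10))
    # 100 2
    # 4500 5
    # 50000 10
```

The minimum keeps some capacity available even when traffic is light. The maximum caps cost during a spike.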

Demo

1. How to load balance HTTP traffic using Application Gateway.

https://docs.microsoft.com/en-us/azure/application-gateway/quick-create-portal

2. How to configure Application Gateway to achieve URL-based content routing.

https://docs.microsoft.com/en-us/azure/application-gateway/application-gateway-create-url-route-portal

3. How to configure Application Gateway to host multiple sites (multi-site routing).

4. How to enable the web application firewall on an Application Gateway and simulate an attack, to check whether the firewall protects your web application against cross-site scripting (XSS) attacks and similar threats.
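For the attack simulation in demo 4, one simple approach is to send a request whose query string carries a classic XSS test string and then look at the status code. With the WAF in Prevention mode, a blocked request is answered with 403 Forbidden by default. In Detection mode, the request is only logged and passes through. The parameter name `q` and the frontend host name below are placeholders. Replace the host with your own gateway's frontend address.

```python
import urllib.error
import urllib.parse
import urllib.request

XSS_PAYLOAD = "<script>alert('xss')</script>"  # classic cross-site scripting test string


def build_probe_url(base_url: str, param: str = "q") -> str:
    """Attach the URL-encoded XSS payload as a query parameter (param name is arbitrary)."""
    query = urllib.parse.urlencode({param: XSS_PAYLOAD})
    return f"{base_url.rstrip('/')}/?{query}"


def send_probe(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code; urllib raises HTTPError for 4xx/5xx, so catch it."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


if __name__ == "__main__":
    url = build_probe_url("http://gateway.example.com")  # placeholder: use your frontend address
    print(url)
    # print(send_probe(url))  # 403 suggests the WAF blocked the request
```

Only send such probes to gateways you own or are authorized to test.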

Scripts to configure Application Gateway using Terraform (coming soon)