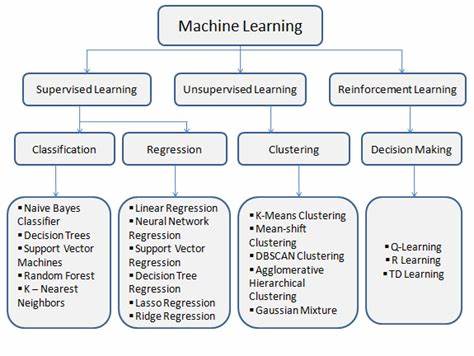

1. Supervised Learning

Supervised Learning involves training a model on labeled data, where the correct output is already known. It’s divided into Classification and Regression.

Classification:

- Key Algorithms:

- Naive Bayes Classifier

- Decision Trees

- Support Vector Machines (SVM)

- Neural Networks

- Random Forest

- K-Nearest Neighbors (KNN)

Memory Technique:

- Naive Bayes: Think of a "naive" person who judges each clue on its own, as if no clue had anything to do with the others (this represents the feature-independence assumption in Naive Bayes).

- Decision Trees: Visualize a tree with branches where each split is a decision.

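- Support Vector Machines (SVM): Imagine a line (a hyperplane in higher dimensions) drawn through the widest gap between two groups. The points closest to it on each side are the "support vectors" that hold the boundary in place.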

- Neural Networks: Picture a network of neurons in your brain making decisions.

- Random Forest: Imagine a forest with many different trees (multiple decision trees working together).

- K-Nearest Neighbors (KNN): Think of asking your nearest neighbors for their opinion to classify something.
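The "ask your nearest neighbors" idea can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not a production implementation. The function name `knn_predict` and the toy points are made up for this example.

```python
from collections import Counter
import math

def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest neighbors.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    # The most common label among those neighbors is the prediction.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy data (invented for illustration): two well-separated groups.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (6.0, 6.0), (7.0, 6.5), (6.5, 7.0)]
labels = ["red", "red", "red", "blue", "blue", "blue"]

print(knn_predict(points, labels, (1.2, 1.4)))  # query near the "red" group
print(knn_predict(points, labels, (6.4, 6.2)))  # query near the "blue" group
```

There is no training step here: the "model" is simply the stored data, which is why KNN is easy to picture but can be slow on large datasets.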

Regression:

- Key Algorithms:

- Linear Regression

- Neural Network Regression

- Support Vector Regression (SVR)

- Decision Tree Regression

- Lasso Regression

- Ridge Regression

Memory Technique:

- Linear Regression: Picture a straight line fitting data points.

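- Lasso and Ridge Regression: Think of a cowboy's lasso tightening around unnecessary features until their coefficients are pulled all the way to zero (Lasso reduces complexity by dropping features), while Ridge shrinks large coefficients toward zero without eliminating them.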
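To see how the two penalties behave differently, here is a small sketch of what each one does to a single coefficient. It assumes the simplest textbook setting (orthonormal features, with the loss scaled as half the squared error), where Lasso reduces to soft-thresholding and Ridge to proportional shrinkage. The function names and starting coefficients are invented for illustration.

```python
def lasso_shrink(coef, lam):
    """Soft-thresholding: move coef toward zero by lam, stopping at zero."""
    if coef > lam:
        return coef - lam
    if coef < -lam:
        return coef + lam
    return 0.0  # small coefficients are removed entirely

def ridge_shrink(coef, lam):
    """Proportional shrinkage: scale coef down, but never exactly to zero."""
    return coef / (1.0 + lam)

# Hypothetical unpenalized coefficients: two strong features, two weak ones.
ols_coefs = [4.0, -2.0, 0.3, -0.1]
lam = 0.5  # penalty strength (an arbitrary choice for this sketch)

lasso = [lasso_shrink(c, lam) for c in ols_coefs]
ridge = [ridge_shrink(c, lam) for c in ols_coefs]

print("lasso:", lasso)  # weak features become exactly 0.0
print("ridge:", ridge)  # every feature shrinks, none disappears
```

With general (correlated) features the solutions are no longer this simple, but the qualitative picture stays the same: Lasso can zero out features, Ridge only dampens them.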

2. Unsupervised Learning

Unsupervised Learning involves analyzing data that is not labeled. It mainly focuses on Clustering.

Clustering:

- Key Algorithms:

- K-Means Clustering

- Mean-shift Clustering

- DBSCAN Clustering

- Agglomerative Hierarchical Clustering

- Gaussian Mixture

Memory Technique:

- K-Means Clustering: Visualize K groups (clusters) with "means" being the center of each.

- Mean-shift: Think of shifting the mean point towards the dense area of data.

- DBSCAN: Imagine scanning a dense cluster of stars (data points).

- Agglomerative Hierarchical Clustering: Picture building a family tree where each node joins closer relatives.

- Gaussian Mixture: Envision different bell curves (Gaussian distributions) overlapping to form a mixture.
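As an illustration of the K-Means picture above (K groups, each with a "mean" at its center), here is a rough standard-library sketch of the usual assign-then-update loop. The starting centroids are fixed by hand so the result is repeatable. Real implementations typically choose them more carefully, and the helper names here are made up.

```python
import math

def assign(points, centroids):
    """Return, for each point, the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        for p in points
    ]

def k_means(points, centroids, max_iterations=100):
    """Alternate assignment and mean-update steps until centroids stop moving."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iterations):
        labels = assign(points, centroids)
        new_centroids = []
        for i, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                # Move the centroid to the mean of its members, axis by axis.
                new_centroids.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                new_centroids.append(old)  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: nothing moved
        centroids = new_centroids
    return centroids, assign(points, centroids)

# Toy data (invented): two obvious groups, K = 2.
data = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
centers, cluster_ids = k_means(data, [(0.0, 0.0), (10.0, 10.0)])
print(centers)
print(cluster_ids)
```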

3. Reinforcement Learning

Reinforcement Learning focuses on Decision Making through interactions with an environment, learning from the outcomes.

Decision Making:

- Key Algorithms:

- Q-Learning

- R-Learning

- TD Learning (Temporal Difference Learning)

Memory Technique:

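- Q-Learning: "Q" stands for the quality of an action. Think of a lookup table that scores how good each action is in each state, updated as rewards come in.

- R-Learning: Remember "R" for "Reward": it learns to maximize the average reward per step rather than a discounted sum.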

- TD Learning: Imagine time (Temporal) affecting decisions as you learn from the differences between expected and actual rewards.
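The "difference between expected and actual rewards" is easiest to see in code. Below is a minimal sketch of a single tabular Q-learning step, which is itself a temporal-difference update. The state names, action names, learning rate `alpha`, and discount `gamma` are arbitrary choices for illustration.

```python
def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning step in place and return the TD error."""
    # Best value the agent currently believes it can get from the next state.
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    # What this step suggests the value should be.
    td_target = reward + gamma * best_next
    # Temporal difference: suggested value minus the current estimate.
    current = q.get((state, action), 0.0)
    td_error = td_target - current
    # Nudge the estimate part of the way toward the target.
    q[(state, action)] = current + alpha * td_error
    return td_error

q_table = {}                      # unseen (state, action) pairs default to 0.0
moves = ["left", "right"]         # hypothetical action set

first_error = q_learning_update(q_table, "s0", "right", 1.0, "s1", moves)
second_error = q_learning_update(q_table, "s0", "right", 1.0, "s1", moves)
print(first_error, second_error, q_table[("s0", "right")])
```

Repeating the same experience shrinks the error each time, which is the sense in which the agent "learns from the difference".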

Summary:

- Supervised Learning: "Teachers" (labels) guide the model (Classification and Regression).

- Unsupervised Learning: The model "explores" data without guidance (Clustering).

- Reinforcement Learning: The model "learns from experience" through rewards and penalties (Decision Making).

By associating each concept with a visual or simple analogy, you'll find it easier to recall the key points when studying AI and ML. These memory techniques can be particularly helpful when preparing for exams or applying these concepts to practical problems.

Supervised vs. Unsupervised Learning

- Supervised Learning: Involves training a model on a labeled dataset, where the correct output is known (e.g., predicting house prices based on features).

- Unsupervised Learning: Involves training a model on data without explicit labels, allowing the model to find patterns (e.g., clustering customers based on purchasing behavior).

Memory Technique:

- Think of a supervisor (teacher) guiding the learning process with answers, hence Supervised Learning.

- Unsupervised means no supervisor, so the model finds patterns on its own, like exploring an unsupervised playground.

Classification and regression are both types of supervised machine learning algorithms, but they serve different purposes and work with different types of data outputs.

2. Key Algorithms

- Linear Regression (Supervised, Regression): Predicts a continuous outcome based on the relationship between variables.

- Logistic Regression (Supervised, Classification): Predicts a categorical outcome (e.g., Yes/No) using probabilities.

Memory Technique:

- Linear Regression: Think of a straight line fitting data points.

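- Logistic Regression: Imagine logistics deciding between two paths, hence classification into categories. (The name itself comes from the logistic, or sigmoid, function, which turns a score into a probability between 0 and 1.)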
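A small sketch can make the contrast concrete: linear regression returns a number on a line, while logistic regression squeezes a linear score into a probability and then picks a category. The line is fitted with the standard one-feature least-squares formulas. The logistic `weight` and `bias` below are hand-picked, not learned, and all data is invented.

```python
import math

def fit_line(xs, ys):
    """Least-squares slope and intercept for a single input feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept

def sigmoid(z):
    """Logistic function: maps any real score to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def logistic_predict(x, weight, bias, threshold=0.5):
    """Return (probability of the positive class, predicted class 0 or 1)."""
    probability = sigmoid(weight * x + bias)
    return probability, int(probability >= threshold)

# Linear regression: continuous output.
slope, intercept = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(slope * 5.0 + intercept)   # predicted value for x = 5

# Logistic regression: categorical output (weights chosen by hand here).
print(logistic_predict(5.0, weight=1.0, bias=-3.0))
print(logistic_predict(1.0, weight=1.0, bias=-3.0))
```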

1. Type of Output:

Classification: The primary goal of classification is to predict a categorical label, which means the output variable is discrete. For example, classification tasks include predicting whether an email is spam or not (binary classification) or categorizing an image as a cat, dog, or bird (multi-class classification).

You can use the acronym "CLASS" to remember the differences:

- C: Categories (Classification = Categorical outputs)

- L: Labels (Classification = Predicting labels like Yes/No)

- A: Algorithms (Classification uses Logistic Regression, SVM)

- S: Segments (Classification segments data into predefined classes)

- S: Scores (Classification uses metrics like Accuracy, F1-Score)

Regression: Regression aims to predict a continuous numeric value. The output variable in regression is a real number. Examples include predicting house prices, stock prices, or the temperature on a given day.

For Regression, remember "NUM":

- N: Numeric (Regression = Predicting numeric values)

- U: Unbroken (Regression models continuous, unbroken data)

- M: Metrics (Regression uses MAE, MSE, R-squared)

2. Nature of the Problem:

Classification: Involves dividing the input data into predefined classes or categories. The model learns from the labeled training data and tries to classify new data points into one of the existing classes.

Regression: Involves modeling the relationship between the input features and the output variable. The model predicts a value that falls within a continuous range based on the input features.

3. Algorithms Used:

Classification: Common algorithms include:

- Logistic Regression

- Decision Trees

- Random Forests

- Support Vector Machines (SVMs)

- Neural Networks

Regression: Common algorithms include:

- Linear Regression

- Polynomial Regression

- Ridge Regression

- Lasso Regression

- Support Vector Regression (SVR)

4. Evaluation Metrics:

Classification: Evaluation metrics include accuracy, precision, recall, F1-score, ROC-AUC, and confusion matrix. These metrics help determine how well the model is classifying the data into the correct categories.

Regression: Evaluation metrics include Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and R-squared. These metrics assess how close the predicted values are to the actual values.
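Most of the metrics named above are short formulas, and writing them out can help them stick. The sketch below computes accuracy, precision, recall, and F1 for a binary problem, and MAE, MSE, RMSE, and R-squared for regression, using their standard definitions. ROC-AUC and the full confusion matrix are left out to keep it short, and the sample labels and values are invented.

```python
import math

def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    tn = sum(t != positive and p != positive for t, p in pairs)  # true negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE, and R-squared for numeric predictions."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_total = sum((t - mean_true) ** 2 for t in y_true)  # spread of the true values
    ss_residual = sum(e * e for e in errors)              # spread left unexplained
    return {
        "mae": sum(abs(e) for e in errors) / n,
        "mse": mse,
        "rmse": math.sqrt(mse),
        "r2": 1.0 - ss_residual / ss_total,
    }

clf = classification_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 0, 1, 0, 1])
reg = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0])
print(clf)
print(reg)
```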

5. Examples:

Classification: Predicting whether a patient has a particular disease (Yes/No), detecting fraudulent transactions (Fraud/Not Fraud), or identifying the sentiment of a tweet (Positive/Negative/Neutral).

Regression: Predicting the sales revenue based on advertising spend, estimating the price of a used car based on its features, or forecasting future stock prices.

Summary:

Classification is used when the goal is to assign data into discrete categories or classes.

Regression is used when the goal is to predict a continuous, numeric outcome based on input data.

Understanding the difference between these two types of machine learning algorithms is crucial for selecting the right approach for your specific data and predictive modeling needs.

Key Facts & Formulas:

Type of Output:

- Classification: Discrete output (Categorical labels like Yes/No, Cat/Dog)

- Regression: Continuous output (Numeric values like House Prices, Temperatures)

Nature of the Problem:

- Classification: Divides data into predefined classes or categories.

- Regression: Models relationships to predict values within a continuous range.

Common Algorithms:

- Classification:

- Logistic Regression

- Decision Trees, Random Forests

- Support Vector Machines (SVM)

- Neural Networks

- Regression:

- Linear Regression

- Polynomial Regression, Ridge Regression

- Lasso Regression, Support Vector Regression (SVR)

Evaluation Metrics:

- Classification:

- Accuracy

- Precision, Recall, F1-score, ROC-AUC

- Regression:

- Mean Absolute Error (MAE)

- Mean Squared Error (MSE)

- Root Mean Squared Error (RMSE)

- R-squared

Examples:

- Classification: Spam detection (Spam/Not Spam), Disease diagnosis (Yes/No)

- Regression: Predicting sales revenue, forecasting temperatures

3. Evaluation Metrics

- Accuracy: The proportion of correct predictions out of all predictions (used in classification).

- Mean Squared Error (MSE): Measures the average squared difference between predicted and actual values (used in regression).

Memory Technique:

- Accuracy is like a score in an exam—how many answers you got right.

- MSE: Think of it as Mistakes Squared Everywhere—it captures how far off predictions are.

4. Neural Networks

- Neurons: The basic units of a neural network, inspired by the human brain.

- Layers: Groups of neurons arranged in sequence: an input layer, one or more hidden layers, and an output layer.

Memory Technique:

- Visualize a neural network like a network of friends (neurons), passing information (signals) through layers (social circles).
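To make "neurons passing signals through layers" concrete, here is a tiny forward pass written with plain Python lists. Each neuron takes a weighted sum of its inputs, adds a bias, and applies a sigmoid activation. The weights are arbitrary numbers chosen for illustration: nothing is trained here, and the sigmoid is just one common activation choice.

```python
import math

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of inputs plus bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """One layer: every neuron sees the same inputs but has its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, hidden_weights, hidden_biases, output_weights, output_biases):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)

# Hypothetical network: 2 inputs, 3 hidden neurons, 1 output neuron.
hidden_w = [[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]]
hidden_b = [0.0, 0.1, -0.1]
output_w = [[1.0, -1.0, 0.5]]
output_b = [0.2]

prediction = forward([1.0, 2.0], hidden_w, hidden_b, output_w, output_b)
print(prediction)  # a single value between 0 and 1
```

Training would adjust the weights and biases to reduce prediction error; this sketch only shows how a signal flows once they are set.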

5. Overfitting vs. Underfitting

- Overfitting: The model is too complex and captures noise in the data, performing well on training data but poorly on new data.

- Underfitting: The model is too simple and fails to capture the underlying patterns in the data.

Memory Technique:

- Overfitting: Imagine memorizing every practice question word for word before an exam. You ace the practice set but stumble when the real questions are phrased differently (poor generalization).

- Underfitting: Imagine skimming through notes—too little information means missing key concepts (poor performance).
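One way to spot both problems is to compare error on the training data with error on new data. The sketch below does that for three deliberately simple models on hand-picked toy data: a constant (too simple), a straight line (about right), and a pure memorizer (too flexible). The data was chosen to make the effect obvious, so treat the numbers as an illustration, not a benchmark.

```python
def mse(predict, xs, ys):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data: the underlying trend is y = 2x, with +1/-1 noise added.
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [1.0, 1.0, 5.0, 5.0, 9.0, 9.0]
# New points right beside the training inputs, with the noise flipped.
test_x = [0.1, 1.1, 2.1, 3.1, 4.1, 5.1]
test_y = [-0.8, 3.2, 3.2, 7.2, 7.2, 11.2]

# Underfit: ignore x and always predict the training mean.
mean_y = sum(train_y) / len(train_y)

def underfit(x):
    return mean_y

# Reasonable fit: least-squares straight line.
mean_x = sum(train_x) / len(train_x)
slope = (
    sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
    / sum((x - mean_x) ** 2 for x in train_x)
)
intercept = mean_y - slope * mean_x

def line_fit(x):
    return slope * x + intercept

# Overfit: memorize the training set and answer with the nearest stored point.
def memorizer(x):
    nearest = min(zip(train_x, train_y), key=lambda pair: abs(pair[0] - x))
    return nearest[1]

for name, model in [("underfit", underfit), ("line", line_fit), ("memorizer", memorizer)]:
    train_error = mse(model, train_x, train_y)
    test_error = mse(model, test_x, test_y)
    print(f"{name:10s} train MSE = {train_error:6.2f}   test MSE = {test_error:6.2f}")
```

The pattern to look for: the underfit model is poor on both sets, while the memorizer is perfect on the training set and noticeably worse than the line on the new points.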

Q1. Which type of machine learning algorithm predicts a numeric label associated with an item based on that item’s features?

Select only one answer.

A. classification

B. clustering

C. regression

D. unsupervised
